AI Visibility Tracking for News Publishers: The 3 Layers That Matter

AI visibility tracking has quickly become one of the most talked-about topics in publishing, and also one of the biggest sources of concern. The problem is that there are still no clear KPIs, consistent reporting, or widely accepted standards across platforms. Everyone feels its importance, but most teams are still asking the same questions: what exactly should we measure, and what does “good” even look like?

Over the last few months, I’ve gotten a steady stream of questions from editors, SEOs, and newsroom leaders about how I think AI visibility should work, what matters most, and where to focus first.

So I did what we all do when the rules are still being written: I spent the last couple of months investigating, tracking, and analyzing patterns across AI platforms and Google AI Overviews. Here’s my take on the layers of AI visibility tracking that actually matter for news publishers, and why: one prerequisite (Layer 0) followed by three measurement layers.

- Layer 0: Access and eligibility, can AI systems crawl and use your content?

- Layer 1: Authority prompts, what you are known for inside AI platforms

- Layer 2: Trending visibility, what’s breaking right now in Google AI Overviews

- Layer 3: Inventory-based impact, how AI Overviews affect your actual newsroom output

Layer 0: Access and eligibility, can AI systems crawl and use your content?

Before measuring visibility, confirm the basics: are AI systems allowed to access and reference your content? If the wrong crawlers are blocked, your AI visibility can drop fast, even if your journalism is strong.

The key nuance: not all “AI crawlers” do the same job. Many publishers want to block training and data-collection bots but allow the search and retrieval bots that power discovery surfaces (search and AI answers with links).

- Confirm crawl permissions: Review robots.txt and any CDN rules that may block AI-related bots.

- Separate indexing vs AI usage controls: Some controls apply to AI usage, not traditional search indexing.

- Align policy with business goals: Decide where you want visibility, licensing, or restrictions.

OpenAI, for example, separates bots for different purposes (training vs search). Anthropic also documents multiple bots with different uses. Google provides controls like Google-Extended for certain AI uses, but it is a product token and crawls may still occur via Google’s existing crawlers.

1) Confirm crawl permissions

Make sure you are not accidentally blocking the bots that help you show up in AI search experiences and cited answers.

Example (robots.txt pattern): block OpenAI’s model-training bot and allow OpenAI’s search bot, so you can still be discovered and cited in ChatGPT search:

```
User-agent: GPTBot
Disallow: /

User-agent: OAI-SearchBot
Allow: /
```

(These user agents and how they’re used are documented by OpenAI.)

Block Anthropic’s training crawler while keeping other access options aligned to your policy:

```
User-agent: ClaudeBot
Disallow: /
```

(Anthropic describes ClaudeBot and other bots and how to control them.)

Also, double-check CDN/WAF rules (Cloudflare, Fastly, Akamai) that might block unknown bots even if robots.txt allows them.
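A quick way to sanity-check crawl permissions is to run your robots.txt through Python’s standard-library parser and ask, for each crawler, whether it may fetch a sample article URL. The sketch below inlines the example rules from this section; the sample URL and the agent list are placeholders. Keep two caveats in mind: `urllib.robotparser` is simpler than Google’s parser (it applies the first matching rule in a group rather than the most specific one, and matches user agents by substring), and it knows nothing about CDN/WAF rules, so a bot it reports as allowed can still be blocked at the edge.

```python
from urllib.robotparser import RobotFileParser

# Example policy inlined for illustration; in practice, paste or fetch your live file.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: OAI-SearchBot
Allow: /

User-agent: ClaudeBot
Disallow: /
"""

# Crawlers to audit (placeholder list; extend with any bot your policy cares about).
AGENTS = ["GPTBot", "OAI-SearchBot", "ClaudeBot", "Googlebot"]


def audit_robots(robots_txt: str, url: str, agents: list[str]) -> dict[str, bool]:
    """Return {user_agent: allowed?} for a single sample URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    sample_url = "https://www.example.com/news/world/sample-story"  # placeholder URL
    for agent, allowed in audit_robots(ROBOTS_TXT, sample_url, AGENTS).items():
        print(f"{agent:<15} {'allowed' if allowed else 'BLOCKED'}")
```

To audit the live file instead, `RobotFileParser` also offers `set_url()` and `read()`; the example keeps the rules inline so it runs offline. Agents with no matching group (Googlebot here) are reported as allowed.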

2) Separate indexing vs AI usage controls

Some controls affect AI training or AI product usage, but do not necessarily affect standard search indexing, and vice versa.

Examples:

- Google Search indexing control: blocking Googlebot affects indexing in Google Search.

- Google-Extended: a control token intended to manage whether content can be used for certain Gemini-related AI uses (it’s not a separate crawler user agent). Google-Extended does NOT opt publishers out of AI Overviews (AIO) in Search.

- Applebot-Extended: a control token (the crawling itself is still done by Applebot). Disallowing it lets Apple keep using your content for Siri and Spotlight search results while opting out of having it used to train Apple’s generative models.

```
# Keep Google Search indexing (generally) intact
User-agent: Googlebot
Allow: /

# Restrict certain Gemini-related AI uses via Google-Extended
User-agent: Google-Extended
Disallow: /
```

Important: blocking Google-Extended prevents your content from being used in standalone Google AI products (like the Gemini chatbot or Vertex AI), but it does not prevent your content from appearing in AI Overviews (AIO) in Google Search. Currently, the main way to limit AIO usage is with snippet controls such as nosnippet, which also restrict your regular search snippets and often hurt traditional organic traffic. Proceed with caution.

3) Align policy with business goals

Decide what you’re optimizing for, then set your crawler rules accordingly.

Common publisher goals and examples

- Goal A: “Do not train models on our content, but keep discovery and citations.”

Example approach: block training bots (GPTBot, ClaudeBot), allow search/retrieval bots (like OAI-SearchBot), and keep Google Search indexing accessible.

- Goal B: “Allow everything for maximum reach.”

Example approach: allow all bots and focus on tracking citations and traffic impact rather than restrictions.

- Goal C: “Lock down most AI usage unless licensed.”

Example approach: block training and retrieval bots broadly, then whitelist partners via contracts and technical allowlists (often enforced at the CDN level in addition to robots.txt).
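If you want each goal to translate into a reviewable file, a small generator can help. This is a hypothetical sketch: the `POLICIES` presets mirror Goals A to C using only the bots named above, and keeping Googlebot allowed under Goal C is an assumption (the goal does not say whether search indexing stays open). Partner bots passed in `partners` are moved from the block list to the allow list. Remember that robots.txt is advisory, so Goal C still needs enforcement at the CDN level.

```python
# Hypothetical presets mirroring Goals A-C; the bot lists are examples, not a complete inventory.
POLICIES = {
    "A": {"block": ["GPTBot", "ClaudeBot"], "allow": ["OAI-SearchBot", "Googlebot"]},
    "B": {"block": [], "allow": ["*"]},
    # Assumption: Goal C keeps Google Search indexing open.
    "C": {"block": ["GPTBot", "OAI-SearchBot", "ClaudeBot"], "allow": ["Googlebot"]},
}


def render_robots_txt(goal: str, partners: tuple[str, ...] = ()) -> str:
    """Render a robots.txt body for a goal; licensed partner bots are always allowed."""
    policy = POLICIES[goal]
    blocked = [bot for bot in policy["block"] if bot not in partners]
    # dict.fromkeys removes duplicates while keeping the original order.
    allowed = list(dict.fromkeys([*policy["allow"], *partners]))
    groups = [f"User-agent: {bot}\nDisallow: /" for bot in blocked]
    groups += [f"User-agent: {bot}\nAllow: /" for bot in allowed]
    # A blank line separates groups, matching the examples above.
    return "\n\n".join(groups) + "\n"


if __name__ == "__main__":
    print(render_robots_txt("A"))
    # Goal C with a hypothetical licensing deal that re-allows one retrieval bot.
    print(render_robots_txt("C", partners=("OAI-SearchBot",)))
```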

Layer 1: Authority prompts, what you are known for inside AI platforms

Start with the topics, entities, and beats you want to own, then test whether AI assistants associate your brand with those areas. This layer measures whether AI systems see your publisher as an authority, and whether they surface your brand when users ask broad, high-intent questions.


Step 1: Build your “authority set”, the topics you own (and want to own)

You can assemble this list from four inputs:

- Your own demand (Google Search Console): pull your top head terms, the broad queries that consistently drive clicks and impressions to your site.

- Competitive demand (SEO tools): use your preferred SEO platform to identify the head terms driving traffic to key competitors, especially in your priority sections.

- AI perception check: ask an AI assistant questions like: “What topics is [brand] known for?” or “What is [domain.com] an authority on?” This reveals how the model currently categorizes your brand.

- Entities you already cover (content NLP): analyze your published content with an entity extractor (for example, Google Cloud Natural Language), then identify the entities you cover most frequently and most consistently. This helps you separate what you think you own from what your inventory actually reinforces.

The output of this step is a ranked list of 20 to 100 topics/entities that represent your authority footprint.
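One way to turn these inputs into the ranked list is to normalize each source to a 0 to 1 scale and combine them with weights. The sketch below assumes you have already exported topic-level scores (for example, GSC clicks, competitor traffic from your SEO tool, and article counts per extracted entity) under consistent topic labels; the weights, source names, and sample numbers are made up for illustration. The AI perception check is kept as a flag rather than a score, so you can spot topics with strong demand and coverage that assistants don’t yet associate with you.

```python
from collections import defaultdict

# Hypothetical weights: tune them to how much you trust each input.
WEIGHTS = {"own_demand": 0.4, "competitor_demand": 0.3, "entity_coverage": 0.3}


def normalize(scores: dict[str, float]) -> dict[str, float]:
    """Scale one source to 0..1 so clicks, traffic, and entity counts are comparable."""
    top = max(scores.values(), default=0)
    return {topic: value / top for topic, value in scores.items()} if top else {}


def build_authority_set(sources: dict[str, dict[str, float]], ai_perceived: set[str], limit: int = 100):
    """Rank topics by weighted, normalized scores.

    Returns (topic, score, named_by_ai) tuples; named_by_ai records whether the
    AI perception check already associates the topic with your brand.
    """
    combined: dict[str, float] = defaultdict(float)
    for source, scores in sources.items():
        for topic, value in normalize(scores).items():
            combined[topic] += WEIGHTS[source] * value
    ranked = sorted(combined.items(), key=lambda item: item[1], reverse=True)[:limit]
    return [(topic, round(score, 3), topic in ai_perceived) for topic, score in ranked]


if __name__ == "__main__":
    # Illustrative numbers only.
    sources = {
        "own_demand": {"world news": 900, "ukraine": 600, "climate": 300},    # e.g. GSC clicks
        "competitor_demand": {"world news": 1000, "middle east": 800},         # e.g. SEO tool traffic
        "entity_coverage": {"ukraine": 120, "world news": 80, "climate": 60},  # e.g. article counts
    }
    for row in build_authority_set(sources, ai_perceived={"world news", "climate"}):
        print(row)
```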


Step 2: Expand each topic into real user questions

For each topic, pull the most common question patterns users ask in Search. A practical starting point is People Also Ask style questions, because they represent natural-language intent and match how people phrase prompts in AI tools.

Examples:

- “What happened in…”

- “Why is…”

- “How does… work?”

- “What are the latest updates on…”
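To see which question types dominate a topic, you can bucket exported People Also Ask style questions by the patterns above. This minimal sketch assumes the questions are already collected as plain strings (from your SEO tool or manual SERP checks); the regular expressions cover only the four example patterns and are meant to be extended.

```python
import re

# The four example patterns from Step 2 (extend with patterns from your own PAA exports).
PATTERNS = {
    "what_happened": re.compile(r"^what happened in\b", re.IGNORECASE),
    "why": re.compile(r"^why is\b", re.IGNORECASE),
    "how_does_work": re.compile(r"^how does .+ work\??$", re.IGNORECASE),
    "latest_updates": re.compile(r"^what are the latest updates on\b", re.IGNORECASE),
}


def bucket_questions(questions: list[str]) -> dict[str, list[str]]:
    """Group raw questions by intent pattern; anything unmatched goes to 'other'."""
    buckets: dict[str, list[str]] = {name: [] for name in PATTERNS}
    buckets["other"] = []
    for raw in questions:
        question = raw.strip()
        for name, pattern in PATTERNS.items():
            if pattern.search(question):
                buckets[name].append(question)
                break  # first matching pattern wins
        else:
            buckets["other"].append(question)
    return buckets
```

Large “other” buckets are a hint that your topic attracts question shapes the starter patterns miss.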


Step 3: Translate entities and topics into AI-native prompts

Next, ask AI platforms to generate the most popular, relevant ways people would ask about that topic inside an assistant.

Prompts tend to be more conversational and specific than classic search queries, for example:

- “Explain [topic] like I’m new to it”

- “Summarize what’s going on with [topic] in 5 bullet points”

- “What are the key developments this week in [topic]?”

- “What should I know about [topic] and why does it matter?”

This step helps you capture prompt formats that Search tools often miss.
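A simple way to operationalize this step is to cross your authority topics with a set of AI-native templates, then ask an assistant for more phrasings and clean up its reply. In this sketch, the templates come from the examples above, while the `generation_request` wording and the `parse_generated` cleanup rules are assumptions. The code does not call any AI platform; sending the request is left to whatever tool or workflow you use.

```python
import re

# Templates adapted from the Step 3 examples; a starting set, not a standard.
PROMPT_TEMPLATES = [
    "Explain {topic} like I'm new to it",
    "Summarize what's going on with {topic} in 5 bullet points",
    "What are the key developments this week in {topic}?",
    "What should I know about {topic} and why does it matter?",
]


def expand_prompts(topics: list[str]) -> list[dict[str, str]]:
    """Cross every authority topic with every AI-native template."""
    return [{"topic": t, "prompt": tpl.format(topic=t)} for t in topics for tpl in PROMPT_TEMPLATES]


def generation_request(topic: str, n: int = 10) -> str:
    """Hypothetical text to send to an assistant asking for more phrasings of a topic."""
    return (
        f"List the {n} most common ways people would ask an AI assistant about {topic}. "
        "Return one question per line with no numbering or commentary."
    )


def parse_generated(reply: str) -> list[str]:
    """Turn the assistant's reply into clean prompts, stripping stray bullets or list numbers."""
    prompts = []
    for line in reply.splitlines():
        cleaned = re.sub(r"^\s*(?:[-*\u2022]|\d+[.)])\s*", "", line).strip()
        if cleaned:
            prompts.append(cleaned)
    return prompts
```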


Step 4: Track AI visibility across assistants

Now track whether your publisher shows up for those prompts, and how.

What to track:

- Brand mention or recommendation (are you referenced at all?)

- Citation / sourcing behavior (are you cited as a source, and is it linked?)

- Competitor comparison (who is being recommended instead of you?)

- Stability over time (does this change week to week?)

Done right, this layer becomes your “AI authority scoreboard”: it tells you where AI sees you as credible and where you have an authority gap to close.
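Scoring each captured answer against the first three checks can be as simple as the sketch below. It assumes you already have the answer text and the list of cited URLs for a prompt (exported from a tracking tool or copied manually); brand names, your domain, and competitor names are inputs you supply. Plain substring matching is a deliberate simplification and can produce false positives for short or ambiguous names. For stability over time, store each result with the date, assistant, and prompt, then compare runs week to week.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass
class ResponseCheck:
    mentioned: bool               # brand named anywhere in the answer text
    cited_with_link: bool         # at least one cited URL is on your domain
    competitors_named: list[str]  # rival brands named in the same answer


def on_domain(url: str, domain: str) -> bool:
    """True if the URL's host is the domain itself or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    return host == domain or host.endswith("." + domain)


def check_response(answer: str, cited_urls: list[str], brand_names: list[str],
                   brand_domain: str, competitors: list[str]) -> ResponseCheck:
    """Score one captured assistant answer for one tracked prompt."""
    text = answer.lower()
    return ResponseCheck(
        mentioned=any(name.lower() in text for name in brand_names),
        cited_with_link=any(on_domain(url, brand_domain) for url in cited_urls),
        competitors_named=[c for c in competitors if c.lower() in text],
    )
```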

Example: BBC and World News

If the BBC wants to own World News and geopolitics, it should track prompts like:

- “What’s happening around the world today?”

- “Latest world news”

- “Most reliable sources for world news”

- “What’s happening in [country or region]?”

- “Explain the situation in [country]”

What to measure

- Brand association rate: how often your brand is mentioned or recommended for your authority areas

- Attribution quality: whether you are cited as a linked source vs just mentioned

- Consistency across models and markets: how stable the association is across assistants, countries, and languages

- Sentiment and framing: the tone and context of your brand mentions (for example, trusted authority, neutral reference, or disputed/controversial), and whether the AI positions you as the primary source, a secondary source, or a “background” citation
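Once the same prompts have been run repeatedly, the first three measures roll up into rates. The sketch below is a hypothetical aggregation that assumes each run is stored as a record with the assistant (`model`), the market, and two booleans for mention and linked citation; the consistency gap is one possible definition (best minus worst association rate). Sentiment and framing usually need human review or a separate classifier, so they are left out here.

```python
from collections import defaultdict


def association_rates(records):
    """Aggregate repeated prompt checks into rates per (model, market).

    Each record is a dict with keys: model, market, mentioned (bool), linked (bool).
    """
    totals = defaultdict(lambda: [0, 0, 0])  # [runs, mentions, linked citations]
    for rec in records:
        bucket = totals[(rec["model"], rec["market"])]
        bucket[0] += 1
        bucket[1] += int(rec["mentioned"])
        bucket[2] += int(rec["linked"])
    return {
        key: {"association_rate": mentions / runs, "linked_rate": linked / runs}
        for key, (runs, mentions, linked) in totals.items()
    }


def consistency_gap(rates):
    """Spread between the best and worst model/market; smaller means more consistent."""
    values = [r["association_rate"] for r in rates.values()]
    return max(values) - min(values) if values else 0.0
```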

This layer tells you whether the AI ecosystem recognizes your newsroom as a trusted authority for the beats you care about.

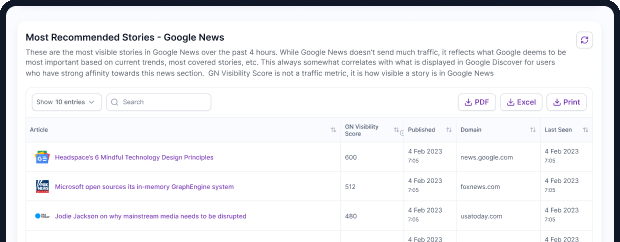

AI visibility tracking for the prompt “best tools to track visibility in AI platforms” - Source: GDdash

Layer 2: Trending visibility, what’s breaking right now in Google AI Overviews

News visibility is won on rapidly changing trending stories. Publishers should track inclusion and citations across Google AI Overviews for trending queries in their priority sections, then benchmark which sources are being cited as stories evolve.

Continuing with the BBC example, at NewzDash we track whether publishers appear in AI Overviews, Top Stories (News Box), and organic results, and across all SERP features.

What to measure

- AIO presence rate on trends: how often trending queries trigger AI Overviews

- Share of voice in AIO: how often you are cited or included when AIO triggers

- Competitor benchmark: which publishers dominate citations as the story develops (tracked multiple times per day)

This layer helps you see who wins the AI summary layer during breaking news, even when rankings move quickly.

AI Overview presence rate for World News in US - Source: NewzDash

Top cited sites in AI Overview for World News trending queries in US - Source: NewzDash
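The three Layer 2 measures can be computed from repeated SERP snapshots of trending queries. In this sketch, the `Snapshot` record shape is an assumption, cited sources are stored as bare domains, and each snapshot counts once, so queries checked more often weigh more in the rates (deduplicate per query first if you prefer). For the competitor benchmark, each domain is counted at most once per snapshot.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Snapshot:
    """One check of one trending query at one time (hypothetical record shape)."""
    query: str
    checked_at: str              # e.g. an ISO timestamp; several checks per day per query
    aio_triggered: bool
    cited_domains: list[str] = field(default_factory=list)


def trending_aio_metrics(snapshots: list[Snapshot], our_domain: str, top_n: int = 5) -> dict:
    with_aio = [s for s in snapshots if s.aio_triggered]
    ours = sum(our_domain in s.cited_domains for s in with_aio)
    # Count each domain at most once per snapshot so repeated links don't inflate it.
    benchmark = Counter(d for s in with_aio for d in set(s.cited_domains))
    return {
        "aio_presence_rate": len(with_aio) / len(snapshots) if snapshots else 0.0,
        "share_of_voice": ours / len(with_aio) if with_aio else 0.0,
        "top_cited": benchmark.most_common(top_n),
    }
```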

Layer 3: Inventory-based impact, how AI Overviews affect your actual newsroom output

This is often the most important layer for publishers because it ties AI visibility to the stories you published today.

Process: If you publish around 100 stories per day, map each story to its primary target query and measure AI impact at scale.

- AI Overview trigger rate: what percent of your primary queries show AI Overviews (example: 20% of today’s story targets trigger AIOs)

- AI Overview visibility share: within those AIO-triggering queries, how often your publisher is cited or included in the AI Overview

This reflects AI Overview impact on your newsroom output, even if referrals from AIO are limited or inconsistent.
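The inventory process reduces to a join between today’s stories and your latest SERP checks for their primary queries. This minimal sketch assumes you store each story’s mapped query and a per-query result saying whether an AI Overview appeared and whether you were cited in it; the `Story` type and `serp` structure are hypothetical. Stories whose queries were not checked are excluded from both rates rather than counted as misses.

```python
from dataclasses import dataclass


@dataclass
class Story:
    story_id: str
    primary_query: str  # the single target query each story is mapped to


def inventory_impact(stories: list[Story], serp: dict[str, dict[str, bool]]) -> dict:
    """serp maps query -> {"aio": AIO shown?, "cited": are we cited in it?} from your latest check."""
    checked = [s for s in stories if s.primary_query in serp]
    triggering = [s for s in checked if serp[s.primary_query]["aio"]]
    cited = [s for s in triggering if serp[s.primary_query]["cited"]]
    return {
        "stories_checked": len(checked),
        "aio_trigger_rate": len(triggering) / len(checked) if checked else 0.0,
        "aio_visibility_share": len(cited) / len(triggering) if triggering else 0.0,
    }


if __name__ == "__main__":
    # Illustrative day: 100 stories, 20 primary queries trigger AIO, 5 of those cite us.
    stories = [Story(f"story-{i}", f"query-{i}") for i in range(100)]
    serp = {f"query-{i}": {"aio": i < 20, "cited": i < 5} for i in range(100)}
    print(inventory_impact(stories, serp))
```

With these illustrative numbers the function reports a 20% trigger rate and a 25% visibility share. If several stories share one primary query, each story counts separately, which keeps the metric tied to newsroom output rather than to unique queries.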

Nuance for publishers:

- AI Overviews in Search are usually more business-critical than “rankings” inside standalone AI platforms (ChatGPT, Gemini, etc.), because Search remains a major discovery surface for news.

- High visibility in AI Overviews does not always correlate with traffic growth. AIOs often answer the user's query directly (zero-click). Treat AIO citations as brand-awareness and authority signals first, and as referral traffic sources second.

Make it publisher-ready: attribution, volatility, and an action loop

1) Track attribution quality, not just “presence”

Presence without value can be misleading. For news publishers, track:

- Cited with a link vs cited without a link (brand mention only)

- Citation prominence: top citations vs buried citations

- URL quality: does the citation point to your canonical story, home/landing page, a syndicated copy, or an aggregator?
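The URL-quality check is easy to automate once you know which domains carry your syndicated copy and which aggregators matter. In this sketch, the category names, the domain sets, and the assumption that citation order reflects prominence (position 1 listed first) are all illustrative. Story and landing paths are compared without trailing slashes, and brand mentions without any URL will not appear here at all, so track those separately.

```python
from urllib.parse import urlparse


def _on_domain(host: str, domain: str) -> bool:
    return host == domain or host.endswith("." + domain)


def classify_citation(url: str, our_domain: str, story_paths: set[str], landing_paths: set[str],
                      syndication_domains: set[str], aggregator_domains: set[str]) -> str:
    """Label where a cited URL points: canonical story, home/landing page, syndicated copy, aggregator."""
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()
    path = parsed.path.rstrip("/") or "/"
    if _on_domain(host, our_domain):
        if path in story_paths:
            return "canonical_story"
        if path == "/" or path in landing_paths:
            return "home_or_landing"
        return "other_own_page"
    if any(_on_domain(host, d) for d in syndication_domains):
        return "syndicated_copy"
    if any(_on_domain(host, d) for d in aggregator_domains):
        return "aggregator"
    return "other"


def audit_citations(cited_urls: list[str], **context) -> list[tuple[int, str]]:
    """Pair each citation's position (assumed: 1 = most prominent) with its category."""
    return [(pos, classify_citation(url, **context)) for pos, url in enumerate(cited_urls, start=1)]
```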

2) Measure with volatility in mind

AI Overviews can vary by location, timing, and query wording, especially during breaking news. Practical upgrades:

- Track priority queries at several times per day (morning, midday, evening)

- Cluster query variants (for example: “what happened in X” vs “X latest” vs “X live updates”)

- Segment by country and language

- Keep a lightweight SERP evidence log: query, timestamp, AIO triggered or not, cited sources
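A lightweight evidence log can be a CSV file with one row per check. This sketch appends rows with a UTC timestamp and adds a `cluster` column that collapses variants like “what happened in X”, “X latest”, and “X live updates” into one key, so they can be compared side by side. The regular expressions, column names, and file path are assumptions to adapt to your own desks and languages.

```python
import csv
import os
import re
from datetime import datetime, timezone

# Hypothetical variant patterns based on the examples above; extend per desk and language.
VARIANT_PATTERNS = [
    re.compile(r"^what happened (?:in|to) (?P<topic>.+?)\??$", re.IGNORECASE),
    re.compile(r"^(?P<topic>.+?) latest$", re.IGNORECASE),
    re.compile(r"^(?P<topic>.+?) live updates$", re.IGNORECASE),
]

FIELDS = ["checked_at", "query", "cluster", "country", "language", "aio_triggered", "cited_sources"]


def cluster_key(query: str) -> str:
    """Collapse query variants to a shared, lowercased topic key."""
    q = query.strip()
    for pattern in VARIANT_PATTERNS:
        match = pattern.match(q)
        if match:
            return match.group("topic").strip().lower()
    return q.lower()


def log_evidence(path: str, query: str, country: str, language: str,
                 aio_triggered: bool, cited_sources: list[str]) -> dict:
    """Append one SERP observation to a CSV evidence log, writing a header for new files."""
    row = {
        "checked_at": datetime.now(timezone.utc).isoformat(timespec="minutes"),
        "query": query,
        "cluster": cluster_key(query),
        "country": country,
        "language": language,
        "aio_triggered": aio_triggered,
        "cited_sources": ";".join(cited_sources),
    }
    is_new = not os.path.exists(path)
    with open(path, "a", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=FIELDS)
        if is_new:
            writer.writeheader()
        writer.writerow(row)
    return row
```

For example, `log_evidence("aio_evidence.csv", "Gaza latest", "US", "en", True, ["bbc.co.uk", "reuters.com"])` would record a row under the `gaza` cluster; the file name and values are illustrative.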

Because AI answers change constantly, the goal is usually not to obsess over the exact placement inside an AI Overview or an AI assistant response. Instead, track the direction over time: how frequently you are mentioned, cited, and linked across repeated checks.
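Tracking direction rather than placement can be done by bucketing repeated checks by ISO week and comparing recent weeks with the ones before. In this sketch, a “hit” can be a mention, a citation, or a linked citation (whichever you choose to trend), and the two-week window and five-point threshold are arbitrary defaults. Weeks without any checks are simply absent, so the window covers the latest weeks that have data.

```python
from collections import defaultdict
from datetime import date


def weekly_rates(checks: list[tuple[date, bool]]) -> dict[tuple[int, int], float]:
    """Share of checks per ISO week that were hits, ordered by week."""
    buckets = defaultdict(lambda: [0, 0])  # (iso_year, iso_week) -> [checks, hits]
    for day, hit in checks:
        iso_year, iso_week, _ = day.isocalendar()
        buckets[(iso_year, iso_week)][0] += 1
        buckets[(iso_year, iso_week)][1] += int(hit)
    return {week: hits / total for week, (total, hits) in sorted(buckets.items())}


def direction(rates: dict[tuple[int, int], float], window: int = 2, threshold: float = 0.05) -> str:
    """Compare the mean of the latest `window` weeks with the `window` weeks before them."""
    values = list(rates.values())
    if len(values) < 2 * window:
        return "insufficient data"
    recent = sum(values[-window:]) / window
    prior = sum(values[-2 * window:-window]) / window
    if recent - prior > threshold:
        return "up"
    if prior - recent > threshold:
        return "down"
    return "flat"
```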

3) Close the loop with actions editors can take

When your trending or inventory tracking shows you are consistently missing from AI Overviews on a topic you cover heavily:

- Identify which competitors are consistently cited, then compare format, freshness, and update cadence

- Decide the win condition for each story type: Top Stories inclusion, Organic ranking, AIO citation, or a mix

- Feed learnings into packaging: headline alignment, primary query targeting, explainers that AI can reference, and rapid updates when a story breaks

A simple dashboard that ties AI visibility to newsroom priorities

Publishers move faster when tracking connects to outcomes. A minimal dashboard that works:

- % of daily inventory whose primary queries trigger AIO

- Your citation share on those AIO-triggering queries

- Your Top Stories presence for the same set of queries

The goal is not to chase every AI mention. It is to build a repeatable system that tells you what you own, what you miss, and what to do next.